I recently worked on an app that loaded several hundred gigabytes of CSVs and attempted to perform expensive transformations on these files in Qlik Sense. Normally this isn't a problem, but due to the way the transformation was written, the result was a saturated server... and I found myself reflecting on what "Big Data" means to different people (and to myself).

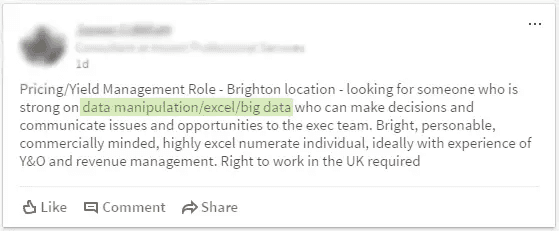

A recruiter's post on LinkedIn also made me chuckle, as it highlights the disparity between definitions well. From my view of big data, I doubt that someone working in that field would be interested in a role where one of the three core job skills is Excel...

Strong in Data Manipulation/Excel/Big Data - so which is it?

My observation is that there are two camps - one side that classifies using the "V's", and another using an altogether simpler definition!

Is it too big to open in Excel?

I like this one.

From a typical end-user perspective, it makes a lot of sense, and is very easily defined by the toolset available to users. We don't have to limit the definition to Excel, but given my recent experience with the large CSV files breaking the tools in my toolbox (the misuse of Hadoop and how big is big data are interesting reads relevant to this comment), it's easy to see some fairly clear groups form:

- Reaching the limit of Excel at 1M rows (let's not even mention how poorly this performs if you've got anything more than a simple calculation) - this is the limit for most business users, including more numerically savvy competencies like Accountancy or Management Consulting
- Reaching the limit of your chosen BI visualisation tool (typically limited by server resources) at up to 1B rows - this appears to be the limit for most Business Analyst and BI developer teams in normal organisations
- Moving beyond 1B rows takes us into an area commonly tagged as Data Science - and specialist tools like Hadoop and Impala (or as we now hear, into managed cloud solutions)
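As a rough illustration, the three groups above can be written as a simple row-count check. This is only a sketch: the tier names are hypothetical labels of my own, the 1B boundary is the approximate figure from the list (in practice it depends on server resources), and the only hard number is Excel's worksheet limit of 1,048,576 rows.

```python
# Hard worksheet row limit in modern Excel (.xlsx); the "1M rows" in the list.
EXCEL_MAX_ROWS = 1_048_576
# Approximate ceiling for a BI tool taken from the list above.
# Assumption: the real limit depends on server resources.
BI_TOOL_MAX_ROWS = 1_000_000_000


def data_capacity_tier(row_count: int) -> str:
    """Return a hypothetical tier label for a dataset with row_count rows."""
    if row_count < 0:
        raise ValueError("row_count must be non-negative")
    if row_count <= EXCEL_MAX_ROWS:
        return "excel"
    if row_count <= BI_TOOL_MAX_ROWS:
        return "bi_tool"
    return "specialist"
```

For example, `data_capacity_tier(250_000_000)` lands in the `"bi_tool"` group, while anything past a billion rows falls through to `"specialist"`.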

Perhaps it's better to refer to these limits as a team's "data capacity", but fundamentally, we can define big data as:

- The volume of data that cannot fit into RAM on a commodity computer (e.g. a typical office PC)
- And/or the volume at which specialist tools and/or techniques are required to analyse that data
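The first point can be turned into a back-of-the-envelope check. A minimal sketch, assuming an in-memory footprint of roughly three times the raw file size (the `overhead_factor` is my own guess, not a measured figure, and varies a lot by tool) and an 8 GiB machine standing in for the "typical office PC":

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def fits_in_ram(file_bytes: int, ram_bytes: int, overhead_factor: float = 3.0) -> bool:
    """Estimate whether a file of file_bytes could be loaded into ram_bytes.

    overhead_factor is an assumed multiplier for how much larger the data
    becomes once parsed into memory; tune it for your own tool.
    """
    if file_bytes < 0 or ram_bytes < 0:
        raise ValueError("sizes must be non-negative")
    return file_bytes * overhead_factor <= ram_bytes


# Hypothetical office PC with 8 GiB of RAM.
office_pc_ram = 8 * GIB
print(fits_in_ram(1 * GIB, office_pc_ram))    # a 1 GiB CSV
print(fits_in_ram(300 * GIB, office_pc_ram))  # several hundred GiB of CSVs
```

By this estimate the 1 GiB file fits and the several-hundred-gigabyte load does not, which matches the saturated server I started with.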

I think this is the practical definition, and it kills the buzz-word a bit. The scientific definition is somewhat more detailed.

Does it conform to the three/four/five V's?

This is what you'll see on most courses, lectures and whitepapers on the subject - and is a more science-based definition. See this infographic by the IBM Big Data Hub, the content on Wikipedia, and many more articles and threads discussing this approach.

So, what are the 3-5 V's?

The five V's (three from Gartner, five from IBM - and matched by consultancies like McKinsey, EY, etc.):

- Volume (Gartner, IBM) - the scale of data. Typically this is presented with very large numbers - terabytes to petabytes of data, upwards of trillions of rows...
- Velocity (Gartner, IBM) - the speed of data, or the pace at which it is now arriving, being captured and analysed
- Variety (Gartner, IBM) - the diversity of data across structured, semi-structured and unstructured formats, fed from social media, images, JSON files, etc.
- Veracity (IBM) - the certainty (or uncertainty) in data. Many of these new data sources may be of poor quality or incomplete, making analysis with fitted models incredibly challenging. It also raises the question - what should we trust?
- Value (IBM) - the ability to generate value through the insights gained from big data analysis. This isn't a core element of most definitions, but it's interesting to see it included as it implies there should be business value before investing in a big data solution

Some of the other V's that I've seen:

- Variability - the velocity and veracity of data vary dramatically over time
- Viability - likely linked to veracity and value
- Visualisation - perhaps ease of analysis via comparative or time-based analysis rather than hypothesis-driven approaches?

Where to go next

It is likely to be quicker and easier to make large data sets accessible in traditional BI tools by providing slices of data from specialist tools like Impala (from Hadoop). The level of aggregation in the data passed to the BI tool then depends on the capacity of the system and the business appetite to use techniques like ODAG and live queries in production.
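To make the idea of passing an aggregated slice to the BI tool concrete, here is a minimal sketch. It swaps the specialist tool for plain Python (it does not use Impala or Qlik), and the column names `region` and `sales` are invented for the example. The point is that rows are streamed one at a time, so only the running totals sit in memory and the BI tool receives a much smaller table.

```python
import csv
import io
from collections import defaultdict


def aggregate_csv(lines, group_col, value_col):
    """Stream CSV rows and sum value_col per group_col.

    Only the per-group totals are held in memory, never the full data set.
    """
    totals = defaultdict(float)
    for row in csv.DictReader(lines):
        totals[row[group_col]] += float(row[value_col])
    return dict(totals)


# Tiny in-memory stand-in for a large CSV file (hypothetical columns).
sample = io.StringIO("region,sales\nnorth,10\nsouth,5\nnorth,2.5\n")
print(aggregate_csv(sample, "region", "sales"))
# prints {'north': 12.5, 'south': 5.0}
```

With a real file you would pass an open file handle instead of the `io.StringIO` sample; how coarse the grouping needs to be is exactly the capacity question above.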

Other areas often associated with Big Data, such as IoT and AI (or machine learning), will require different techniques to capture, process and present data back to users. Interestingly, a number of articles this year report the decline of tools like Hadoop, the maturity of the big data term in organisations, and the use of streaming data.

2017/05/03 - EDIT: Here's another mid-2017 article which again mentions a decline in tools like Hadoop and an increase in migration to the cloud, which is very relevant to this post!

Also relevant is this week's Google Cloud Next event in London - I've been listening in and it has a huge focus on data engineering, data science, and machine learning in Google Cloud.